Micron Technology’s fiscal Q1-2025 earnings report offered two stories: a dramatic surge in data center revenue and a troubling outlook for its consumer-facing NAND business. Despite the strong performance in high-growth areas like AI and data centers, the company’s stock took a sharp dip after hours due to concerns about consumer weakness, especially in the NAND segment.

I’ve owned and recommended Micron for a while now, and even took some profits in June 2024 at $157, when it rose far above what I felt was its intrinsic value. Since it’s a cyclical stock in the commodity memory semiconductor business, getting a good price is unusually important, and it is crucial to take profits when the stock gets ahead of itself.

Micron’s (MU) stock slumped from $108 on weak guidance for the next quarter, and at $89 it now looks very attractive. I’ve started buying again.

Record-breaking data center performance

Micron reported impressive growth in its Compute and Networking Business Unit (CNBU), which saw a 46% quarter-over-quarter (QoQ) and 153% year-over-year (YoY) revenue jump, reaching a record $4.4Bn. This success was largely driven by cloud server DRAM demand and a surge in high-bandwidth memory (HBM) revenue. In fact, data center revenue accounted for over 50% of Micron’s Q1-FY2025 total revenue of $8.7Bn, a milestone for the company.

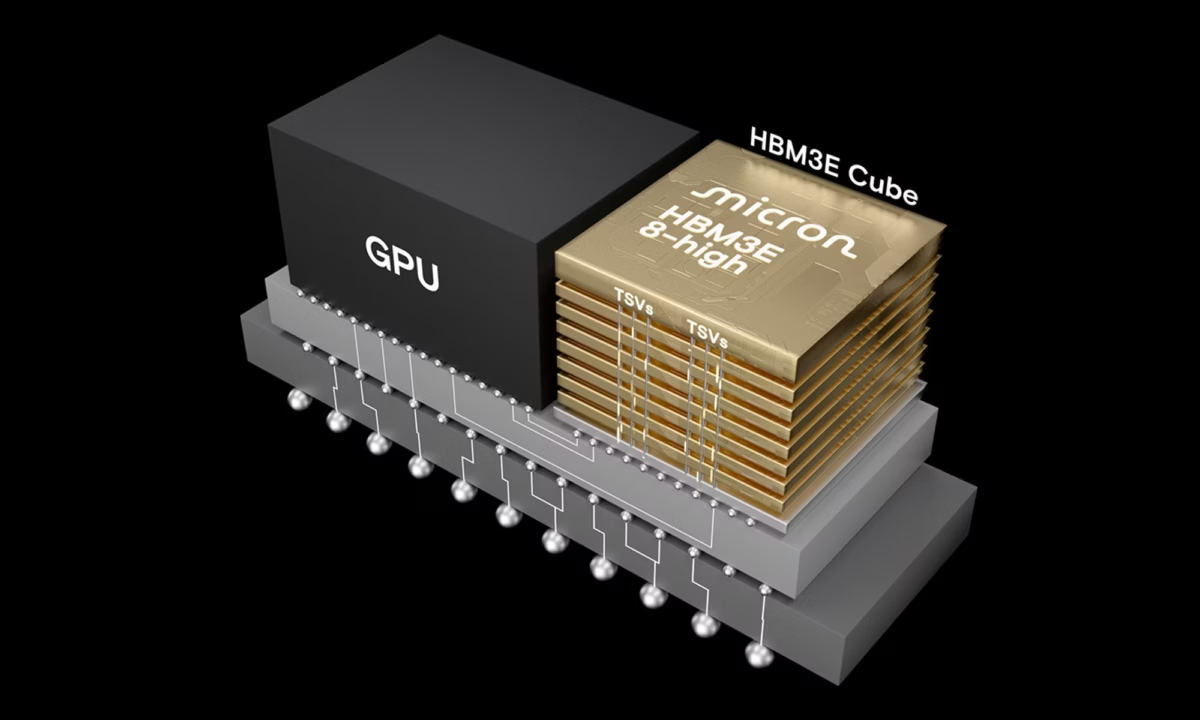

HBM revenue was a standout, with analysts estimating that the company generated $800 to $900Mn in revenue from this segment during the quarter. Micron’s HBM3E memory, which is used in products like Nvidia’s B200 and GB200 GPUs, has been a significant contributor to the company’s data center growth. Micron’s management also raised their total addressable market (TAM) forecast for HBM in 2025 from $25 billion to $30 billion, a strong indicator of the company’s growing confidence in its AI and server business.

Looking ahead, Micron remains optimistic about the long-term prospects of HBM4, with the expectation of substantial growth in the coming years. The company anticipates that HBM4 will be ready for volume production by 2026, offering 50% more performance than its predecessor, HBM3E, and potentially reaching a $100 billion TAM by 2030.

Consumer weakness and NAND woes

While Micron’s data center performance was strong, the company’s consumer-facing NAND business painted a less rosy picture. Micron forecasted a near 10% sequential decline in Q2 revenue, to $7.9Bn, far below the consensus estimate of $8.97 billion, setting a negative tone for the future. This decline was primarily attributed to inventory reductions in the consumer market, a seasonal slowdown, and a delay in the expected PC refresh cycle, a segment that has also derailed other semis such as Advanced Micro Devices (AMD) and Lam Research (LRCX), among others. While NAND bit shipments grew by 83% YoY, a weak demand environment for consumer electronics, especially in the PC and smartphone markets, weighed heavily on performance.

Micron’s CEO, Sanjay Mehrotra, emphasized that the consumer market weakness was temporary and that the company expected to see improvements by early 2025. The company also noted the challenges posed by excess NAND inventory at customers, especially in the smartphone and consumer electronics markets. In addition, Micron’s NAND SSD sales to the data center sector moderated, leading to further concerns about demand sustainability. The slowdown in the consumer space and the underloading of NAND production are expected to continue into Q3, with Micron’s management projecting lower margins for the foreseeable future due to these supply-demand imbalances.

Micron reported Q1 revenue of $8.71 billion, up 84.3% YoY, and in line with consensus estimates. However, the company’s Q2 guidance of $7.9Bn (a 9.3% sequential decline) was notably weaker than the $8.97Bn analysts had expected. The guidance miss sent Micron’s stock down significantly in after-hours trading.
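To make the size of the guidance miss concrete, here is a minimal sketch of the arithmetic using the revenue figures quoted above. The helper name `pct_change` is my own, not anything from Micron’s release.

```python
def pct_change(new: float, old: float) -> float:
    """Return the percentage change going from `old` to `new`."""
    return (new - old) / old * 100.0


# Figures in $Bn, taken from the paragraph above.
q1_revenue = 8.71     # reported Q1-FY2025 revenue
q2_guidance = 7.90    # management's Q2 revenue guidance
q2_consensus = 8.97   # analyst consensus for Q2 before the report

# Sequential (quarter-over-quarter) change implied by the guidance.
sequential_change = pct_change(q2_guidance, q1_revenue)

# How far the guidance sits below what analysts were expecting.
shortfall_vs_consensus = pct_change(q2_guidance, q2_consensus)

print(f"Guided sequential change: {sequential_change:.1f}%")
print(f"Guidance vs consensus:    {shortfall_vs_consensus:.1f}%")
```

On these numbers the guidance implies a 9.3% sequential decline and lands roughly 11.9% below consensus, which is the gap the market reacted to.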

Financial highlights: Strong margins and profitability, but challenges ahead

Improving Margins: Micron’s gross margin for Q1 came in at 38.4%, an improvement of 3.1 percentage points QoQ, largely driven by the strength of HBM and data center DRAM. However, the outlook for Q2 is less optimistic, with gross margins expected to decline by about 1 percentage point due to continued weakness in NAND, along with seasonal factors and underloading impacts.

Micron’s operating margin for the quarter was 25.0%, ahead of guidance, reflecting the company’s tight cost control and strong performance in high-margin segments like HBM. However, for Q2, Micron expects operating margins to contract, with GAAP operating margin expected to drop to 21.8%.

Profitability also improved significantly with GAAP net income rising 111% QoQ to $1.87Bn, resulting in a GAAP EPS of $1.67, compared to a loss of $1.10 in the year-ago quarter. However, Micron guided for a significant drop in EPS for Q2, forecasting GAAP EPS of $1.26, well below the $1.96 expected by analysts.

Cash flow and capital investments

Micron’s cash flow generation remained robust, with operating cash flow (OCF) increasing by 130% YoY to $3.24 billion, but free cash flow (FCF) was more limited due to significant capital expenditures (CapEx) of $3.1 billion. The company also outlined its intention to spend around $14 billion in CapEx in FY25, primarily to support the growth of HBM and other high-margin data center products. In my opinion, this is a necessity to stay close to SK Hynix and Samsung, its biggest rivals in HBM, who also have a large chunk of the market and can easily match Micron in the product improvements needed to supply the likes of Nvidia (NVDA). High CapEx also increases its ability to scale and improve margins down the road, leading to greater cash generation.
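As a rough check on why free cash flow was thin, the sketch below subtracts CapEx from operating cash flow using the rounded figures above. It assumes the simple definition FCF = OCF - CapEx; Micron’s own adjusted free cash flow may be calculated differently, and the variable names are mine.

```python
# Figures in $Bn, rounded as quoted in the paragraph above.
operating_cash_flow = 3.24   # Q1-FY2025 operating cash flow
capex_q1 = 3.10              # Q1-FY2025 capital expenditures
capex_fy25_plan = 14.0       # approximate full-year FY25 CapEx plan

# Simple free cash flow: cash from operations minus capital spending.
free_cash_flow = operating_cash_flow - capex_q1

# Share of the full-year CapEx plan already spent in Q1.
capex_share_of_plan = capex_q1 / capex_fy25_plan * 100.0

print(f"Approximate FCF: ${free_cash_flow:.2f}Bn")
print(f"Q1 CapEx as share of FY25 plan: {capex_share_of_plan:.1f}%")
```

With these inputs, only about $0.14Bn is left over after CapEx, and Q1 spending covers a little over a fifth of the planned $14 billion, so heavy investment should be expected in the remaining quarters too.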

Going home: Micron also announced a $6.1 billion award from the U.S. Department of Commerce under the CHIPS and Science Act to support advanced DRAM manufacturing in Idaho and New York. This partnership aligns with Micron’s long-term growth strategy in the data center and AI segments.

Micron is a bargain

I’m buying the stock with the risk that it could stay range-bound for a few months.

The company’s earnings call reflected a clear divergence in the outlook for its two key segments: data center and consumer electronics. Management sounded confident about data center growth, driven by strong demand for AI-driven applications, while providing a more cautious forecast for the consumer NAND business, where inventory corrections and weakened demand are expected to persist through fiscal Q2 2025.

I’m very confident about Micron’s continued strength in the data center market, driven by AI and cloud computing, and believe that the short-term weakness in consumer-facing NAND and PC markets is an opportunity to buy the stock at a bargain price. The market’s reaction suggests that investors were caught off guard by the weakness in the consumer business, but it has been persisting, as I mentioned earlier with AMD and Lam Research, and even before earnings, at $109, Micron was well below its 52-week high of $158. The further knee-jerk reaction is a boon for the bargain hunter.

For now, I’m not worried if the stock remains range-bound. At $89, the downside is seriously limited, and its future success will rest squarely on HBM data center demand. Its largest customer, Nvidia, is forecast to generate $200Bn worth of data center revenue from its Blackwell line, and Micron will reap a good chunk of that.

Micron is priced at 13x consensus analyst earnings of $6.93 for FY2025 (ending August), which are forecast to grow to $11.53 in FY2026, a whopping jump of 66%, bringing the P/E multiple down to just 8. Even for a cyclical, that’s low. Besides, Micron is also forecast to grow revenues by 28% next year on the back of a 40% increase in FY2025, which takes it soaring past its previous cyclical high of $31Bn in FY2022. With data center revenue contributing more than 50% of the total, Micron does deserve a better valuation.
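The valuation math is easy to reproduce. Here is a minimal sketch using the $89 share price and the consensus EPS estimates quoted above; the function name `forward_pe` is my own.

```python
def forward_pe(price: float, eps: float) -> float:
    """Price-to-earnings multiple for a given share price and EPS estimate."""
    return price / eps


share_price = 89.0    # current price used in this article
eps_fy2025 = 6.93     # consensus EPS estimate, FY ending August 2025
eps_fy2026 = 11.53    # consensus EPS estimate, FY2026

pe_fy2025 = forward_pe(share_price, eps_fy2025)
pe_fy2026 = forward_pe(share_price, eps_fy2026)

# Expected year-over-year EPS growth from FY2025 to FY2026, in percent.
eps_growth = (eps_fy2026 - eps_fy2025) / eps_fy2025 * 100.0

print(f"FY2025 P/E: {pe_fy2025:.1f}x")
print(f"FY2026 P/E: {pe_fy2026:.1f}x")
print(f"EPS growth FY2025 to FY2026: {eps_growth:.0f}%")
```

Rounded, this gives about 13x FY2025 earnings, about 8x FY2026 earnings, and 66% EPS growth, matching the figures above. Swapping in a different `share_price` shows how quickly the multiple moves if the stock stays range-bound or rebounds.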