Marvell Technology (MRVL) $114

I missed buying this in the low 90s while waiting to see whether its transformation into an AI chip company was complete. Its cyclical past and underperforming business segments made me hesitate; besides, far too many promises have been made in the AI space only for investors to be disappointed.

Marvell has been walking the talk: Q3 results in December 2024 were exemplary, and guidance was even better.

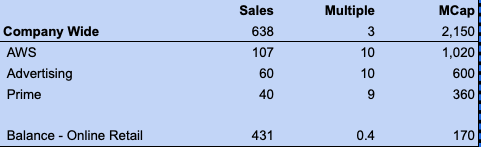

Hyperscaler demand

With planned Capex of $105Bn for 2025, Amazon confirmed on its earnings call that the focus will remain on custom silicon and inference. Amazon and Marvell have a five-year, “multi-generational” agreement under which Marvell provides Amazon Web Services with Trainium and Inferentia chips and other data center equipment. Because the deal is “multi-generational,” Marvell will continue to supply the already-released 5nm Trainium2 (Trn2) while also supplying the newly announced 3nm Trainium3 (Trn3), expected to ship at the end of 2025. Amazon, an investor in Anthropic, plans to build a supercomputing system called Project Rainier with “hundreds of thousands” of Trainium2 chips. The DeepSeek aftermath suggests a further democratization of AI, with inference gaining prominence from 2026.

Critically, like fellow hyperscalers Microsoft, Meta, and Alphabet, Amazon announced a high Capex (Capital Expenditure) plan for 2025: $105Bn, 27% higher than 2024, which itself was 57% higher than the previous year, to fund its AI cloud and data center buildout. Amazon was the last of the big four to confirm that massive AI spending is very much on the cards for 2025.

Here’s the scorecard for 2025 Capex, totaling over $320Bn. A few months back, estimates were swirling around $250Bn to $275Bn. Goldman had circulated $300Bn in total Capex for the year, and these four companies alone have already planned more.

Amazon $105Bn

Microsoft $80Bn

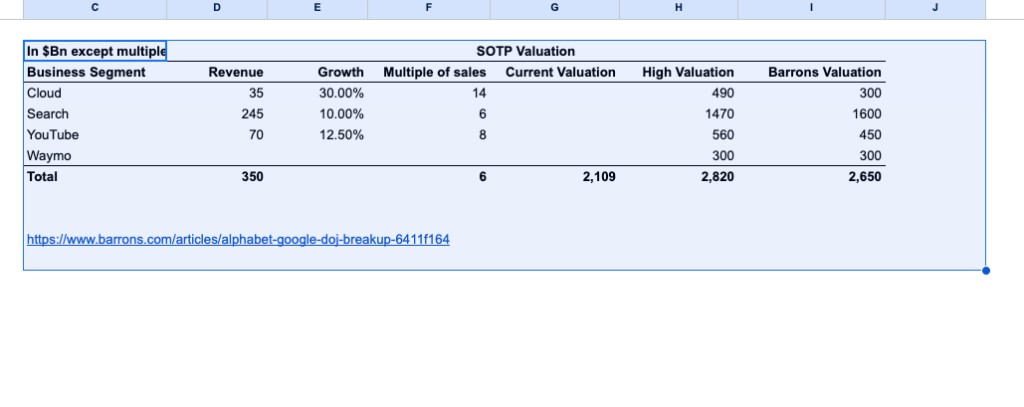

Alphabet $75Bn

Meta $60 to $65Bn

Total $320Bn
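Because Meta guided a range, the scorecard total is itself a range. A minimal Python sketch that checks the arithmetic, using only the figures quoted above (the dictionary of low/high pairs is just an illustrative layout):

```python
# Announced 2025 capex plans in $Bn, as listed in the scorecard.
# Meta guided a range, so every company carries a (low, high) pair.
capex_2025 = {
    "Amazon": (105, 105),
    "Microsoft": (80, 80),
    "Alphabet": (75, 75),
    "Meta": (60, 65),
}

low_total = sum(low for low, _ in capex_2025.values())
high_total = sum(high for _, high in capex_2025.values())

print(f"Big-four 2025 capex: ${low_total}Bn to ${high_total}Bn")

# Compare the low end against the earlier estimates quoted in the text.
for label, estimate in (("Top of earlier range", 275), ("Goldman", 300)):
    print(f"{label} (${estimate}Bn) exceeded by ${low_total - estimate}Bn")
```

Even at the low end of Meta's range, the four companies clear Goldman's $300Bn figure by $20Bn.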

The earnings call also discussed DeepSeek R1, the lower AI cost structure it may presage, and the possibility of lower revenue for AI cloud services.

“We have never seen that to be the case,” Amazon CEO Andy Jassy said on the call. “What happens is companies will spend a lot less per unit of infrastructure, and that is very, very useful for their businesses. But then they get excited about what else they could build that they always thought was cost prohibitive before, and they usually end up spending a lot more in total on technology once you make the per unit cost less.”

Besides buying Nvidia Blackwells by the truckload, Amazon plans to spend heavily on custom silicon and to focus on inference.

Q3-FY2025

Marvell reported impressive Q3 results, led by strong AI demand, that beat revenue estimates by 4% and adjusted EPS estimates by 5.5%. FQ3 revenue growth accelerated to 6.9% YoY and 19.1% QoQ, reaching $1.52 billion, helped by a stronger-than-expected ramp of the AI custom silicon business.

For the next quarter, management expects revenue of $1.8 billion at the midpoint, up 26.2% YoY and 18.7% QoQ. The Q4 guide beats revenue estimates by 9.1% and adjusted EPS estimates by 13.5%. Management expects to significantly exceed its full-year AI revenue target of $1.5 billion and indicated that it could easily beat its FY2026 AI revenue target of $2.5 billion.
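The growth rates and dollar figures can be cross-checked against each other. The sketch below, using the rounded $1.52Bn and $1.8Bn figures from the text, grows Q3 revenue by the guided 18.7% QoQ and backs out the earlier quarters implied by each growth rate. Because the inputs are rounded, the implied values are approximations rather than reported figures, and `implied_base` is a hypothetical helper name chosen for illustration.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value from a current value and its % growth."""
    return current / (1 + growth_pct / 100)

q3_rev = 1.52    # $Bn, FQ3 FY2025 revenue (rounded, as quoted)
q4_guide = 1.80  # $Bn, FQ4 midpoint guide (rounded, as quoted)

# Sequential check: Q3 revenue grown by the guided 18.7% QoQ
q4_from_qoq = q3_rev * (1 + 18.7 / 100)
print(f"Q3 grown 18.7% QoQ: ${q4_from_qoq:.2f}Bn vs guide ${q4_guide:.2f}Bn")

# Earlier quarters implied by each quoted growth rate
print(f"Implied FQ2 FY2025 (from 19.1% QoQ): ${implied_base(q3_rev, 19.1):.2f}Bn")
print(f"Implied FQ3 FY2024 (from 6.9% YoY): ${implied_base(q3_rev, 6.9):.2f}Bn")
print(f"Implied FQ4 FY2024 (from 26.2% YoY): ${implied_base(q4_guide, 26.2):.2f}Bn")
```

The QoQ guide and the $1.8Bn midpoint agree to within a cent of a billion, so the two guidance numbers are internally consistent.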

Marvell’s other segments, which account for 27% of the business, are not performing as well, but management is going full steam ahead on the custom silicon business and expects data center to exceed 73% of total revenue in the future.

- Adjusted operating margin – 29.7% vs. 29.8% last year, and better than the management guide of 28.9%.

- Management guidance for Q4 is even higher at 33%.

- Adjusted net income – $373 Mn or 24.6% of revenue compared to $354.1 Mn or 25% of revenue last year.

- Management has also committed to GAAP profitability in Q4, with continued improvement after that.
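The net income figures can also be sanity-checked: dividing adjusted net income by its stated margin gives the revenue implied for each quarter. A rough sketch, assuming the rounded margins quoted above (24.6% and 25%), so the implied growth rate drifts slightly from the reported 6.9% YoY; the `implied_revenue` helper is a hypothetical name for illustration.

```python
def implied_revenue(net_income_mn: float, margin_pct: float) -> float:
    """Revenue ($Mn) implied by net income and net margin (margin in percent)."""
    return net_income_mn / (margin_pct / 100)

# Adjusted net income ($Mn) and margin (% of revenue), as quoted above
this_year = implied_revenue(373.0, 24.6)  # FQ3 FY2025
last_year = implied_revenue(354.1, 25.0)  # FQ3 FY2024

yoy_growth_pct = (this_year / last_year - 1) * 100

print(f"Implied FQ3 FY2025 revenue: ${this_year:,.0f}Mn")
print(f"Implied FQ3 FY2024 revenue: ${last_year:,.0f}Mn")
print(f"Implied YoY growth: {yoy_growth_pct:.1f}%")
```

The implied current-quarter revenue lands at about $1.52 billion, matching the reported figure, which suggests the margin percentages are computed on the same revenue base.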

Custom Silicon – Estimates put the TAM (Total Addressable Market) for custom silicon at $42 billion by CY2028, of which Marvell could take a 20% share, or roughly $8Bn of the custom silicon AI opportunity. I suspect we will see a new forecast once the company can talk more openly about an official announcement. On the networking side, the TAM is another $31 billion.
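The 20% share figure is a straight multiplication, and it helps to see how sensitive the dollar outcome is to the share assumption. A minimal sketch: only the $42Bn TAM and the 20% share come from the text; the 10%, 15%, and 25% rows are illustrative alternatives, not anyone's estimates, and `share_of_tam` is a hypothetical helper name.

```python
def share_of_tam(tam_bn: float, share_pct: float) -> float:
    """Revenue ($Bn) captured at a given percentage share of a TAM."""
    return tam_bn * share_pct / 100

CUSTOM_SILICON_TAM_BN = 42.0  # CY2028 custom silicon TAM estimate quoted above

# The 20% case from the text, bracketed by illustrative alternatives
for share in (10, 15, 20, 25):
    revenue = share_of_tam(CUSTOM_SILICON_TAM_BN, share)
    print(f"{share:>2}% share -> ${revenue:.1f}Bn")
```

At 20%, the result is $8.4Bn, which the text rounds to roughly $8Bn; each 5-point swing in share moves the outcome by about $2.1Bn.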

“Oppenheimer analyst Rick Schafer thinks that each of Marvell’s four custom chips could achieve $1 billion in sales next year. Production is already ramping up on the Trainium chip for Amazon, along with the Axion chip for the Google unit of Alphabet. Another Amazon chip, the Inferentia, should start production in 2025. Toward the end of next year, deliveries will begin on Microsoft’s Maia-2, which Schafer hopes will achieve the largest sales of all.”

Key weaknesses and challenges

Marvell carries $4Bn in legacy debt, which will weigh on its valuation.

The stock is already up 70% in the past year and is volatile: it dropped $26, from $126 to $100, after the DeepSeek and tariff scares.

Custom silicon ASICs (Application-Specific Integrated Circuits) face strong competition from the likes of Broadcom, and everyone is chasing market leader Nvidia. As the name suggests, custom silicon is not as widely used as an Nvidia GPU, so it will encounter more difficult sales cycles and buying programs.

Any drop in AI spending by the data center giants would hurt Marvell.

Over 50% of Marvell’s revenue comes from China, and it could become a victim of a trade war.

Valuation: The stock trades at a P/E of 43, with earnings growth of 80% in FY2025 and 30% for each of the next two years; that is reasonable. It has a P/S ratio of 12.6, with revenue growth of 25%. It’s a bit expensive on the sales metric, but with AI taking an even larger share of the revenue pie, this multiple could increase.
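One common way to weigh a P/E against growth is the PEG ratio: P/E divided by the expected annual earnings growth rate in percent, where a reading near 1 is often treated as fair by rule of thumb. A minimal sketch using the figures above; dividing the P/S ratio by revenue growth in the same way is an informal analogue, not a standard metric, and `peg_ratio` is a hypothetical helper name.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """PEG = P/E divided by expected annual EPS growth (in percent)."""
    if growth_pct <= 0:
        raise ValueError("PEG is only meaningful for positive growth")
    return pe / growth_pct

PE = 43.0   # trailing P/E quoted above
PS = 12.6   # price-to-sales quoted above

for label, growth in (("FY2025 EPS growth of 80%", 80.0),
                      ("next-two-year EPS growth of 30%", 30.0)):
    print(f"PEG on {label}: {peg_ratio(PE, growth):.2f}")

# Informal analogue: price-to-sales divided by revenue growth
print(f"P/S per point of revenue growth: {PS / 25.0:.2f}")
```

On these inputs the PEG sits well below 1 on FY2025 growth and somewhat above 1 (about 1.43) on the 30% out-year growth, which fits calling the valuation reasonable rather than cheap.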